Compatibility with older versions of Pentaho Data Integration cannot be guaranteed, even though the modules used in the jobs and transformations are more or less the most common ones. The only exception could be the Pentaho Reporting Output step, which is used to create the static reports inside a transformation and was released in PDI starting from version 4.1.0.

PostgreSQL v9.0 has been used for the Pentaho Data Integration Repository. Older versions should work, because no particular features of the DBMS are used. Please suggest limits and tests that would let it work in other contexts as well.

For those of you who do not feel confident with Pentaho Data Integration, you should know that the E.T.L. are developed as Jobs and Transformations, all stored in a Pentaho Data Integration Repository. They are all stored in a DBMS called ‘A.A.A.R._Kettle’, which can be accessed with the Spoon application of the Pentaho Data Integration Suite.

The A.A.A.R. E.T.L. are developed with Pentaho Data Integration Community Edition v4.3 Stable.

To keep the processes lighter and more scalable, all the E.T.L. are developed with an “incremental” strategy, where only the actions that are new since the last import are extracted into the Data Mart. Scheduling the execution of the two E.T.L. is very easy thanks to the features of Pentaho Data Integration.

Talking about the first E.T.L., the one that extracts Alfresco audit data and repository composition into the Data Mart, Data Warehousing techniques suggest managing the flow of information in steps. The steps are ordered and cannot be executed in parallel:

1. Upload of the audit data and repository composition into a staging area.
2. Reconciliation of the audit data and the repository’s structure.
3. Upload of the reconciled data into the Star Schema (the Data Mart).

In the first step, the upload to the staging area, audit data and document/folder information are loaded into a DBMS table with the same structure as the source. Thanks to Pentaho Data Integration, it is very easy to get audit data from a REST call (the Alfresco web script) directly into a DBMS table that matches its structure. As for the repository composition, the CMIS Input plugin can extract all the available information very easily from inside the Pentaho environment.

In the second step, the reconciliation of the data, the data are “cleaned” of irrelevant actions, “enriched” with dynamic information (for example, the descriptions of days, months, etc.) and “expanded” where they were compacted during the extraction (for example, arrays are exploded into many rows). The goal is to get the audit data and document/folder information ready for insertion into the Star Schema.

In the third and last step, the extended audit data and document/folder information are loaded into the Star Schema (representing the Data Mart). Thanks to Pentaho Data Integration, and in line with Data Warehousing techniques, it is very easy to read data from the DBMS tables and load them into the Star Schema, which is defined with dimension tables and fact tables.

For more details about the two developed E.T.L., we suggest reading the dedicated chapter later in the documentation.

Both E.T.L. are developed to work with parameters that are all stored in the DBMS tables and not in the E.T.L. themselves. We probably have not thought of every need in terms of parameters, but in the future we could extend them according to user requests. At present, it is possible to:

- Have multiple Alfresco sources (one for each row in ‘dm_dim_alfresco’).
- Have multiple, customized reports (one for each row in ‘dm_reports’).
- Upload each report to a different Alfresco target and a different Alfresco path (according to each row in ‘dm_reports’).
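As a minimal sketch of the incremental, three-step flow described in this section (staging, reconciliation, Star Schema load), the following Python code mimics the logic outside of Pentaho. It is not the A.A.A.R. implementation, which is built from PDI jobs and transformations. The record layout, the `heartbeat` action, the "last imported id" marker and all function names are assumptions made only for illustration.

```python
from datetime import datetime

DAY_NAMES = ("Monday", "Tuesday", "Wednesday", "Thursday",
             "Friday", "Saturday", "Sunday")

# Hypothetical audit entries; the real web script output may look different.
SOURCE_AUDIT = [
    {"id": 1, "time": "2012-05-01T09:15:00", "user": "admin",
     "action": "login", "paths": []},
    {"id": 2, "time": "2012-05-01T09:20:00", "user": "admin",
     "action": "read", "paths": ["/a.txt", "/b.txt"]},
    {"id": 3, "time": "2012-05-02T10:00:00", "user": "guest",
     "action": "heartbeat", "paths": []},
    {"id": 4, "time": "2012-05-02T11:30:00", "user": "guest",
     "action": "update", "paths": ["/a.txt"]},
]

IRRELEVANT_ACTIONS = {"heartbeat"}  # assumption: actions dropped when cleaning


def stage(source, last_imported_id):
    """Step 1: copy only the entries newer than the last import, unchanged."""
    return [dict(row) for row in source if row["id"] > last_imported_id]


def reconcile(staged):
    """Step 2: clean irrelevant actions, enrich dates, expand path arrays."""
    rows = []
    for entry in staged:
        if entry["action"] in IRRELEVANT_ACTIONS:
            continue  # "cleaned"
        when = datetime.fromisoformat(entry["time"])
        # "expanded": one row per path; entries without paths keep one row.
        for path in entry["paths"] or [None]:
            rows.append({
                "date": when.date().isoformat(),
                "day_name": DAY_NAMES[when.weekday()],  # "enriched"
                "user": entry["user"],
                "action": entry["action"],
                "path": path,
            })
    return rows


def load_star_schema(rows, dim_users, fact_actions):
    """Step 3: assign dimension keys and append rows to the fact table."""
    for row in rows:
        user_key = dim_users.setdefault(row["user"], len(dim_users) + 1)
        fact_actions.append({"user_key": user_key, "date": row["date"],
                             "day_name": row["day_name"],
                             "action": row["action"], "path": row["path"]})


def run_etl(source, state, dim_users, fact_actions):
    """Run the three steps in order and remember the last imported id."""
    staged = stage(source, state["last_imported_id"])
    load_star_schema(reconcile(staged), dim_users, fact_actions)
    if staged:
        state["last_imported_id"] = max(row["id"] for row in staged)
    return len(staged)
```

Calling `run_etl` twice on the same source stages nothing the second time, which is the point of the incremental strategy: the stored marker keeps already imported actions out of the next run.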

According to the Data Warehousing techniques, the update could be once a day, typically during the night time where the servers work less in average. should be executed periodically to update the Data Mart and, consequentially, the reports in Alfresco.

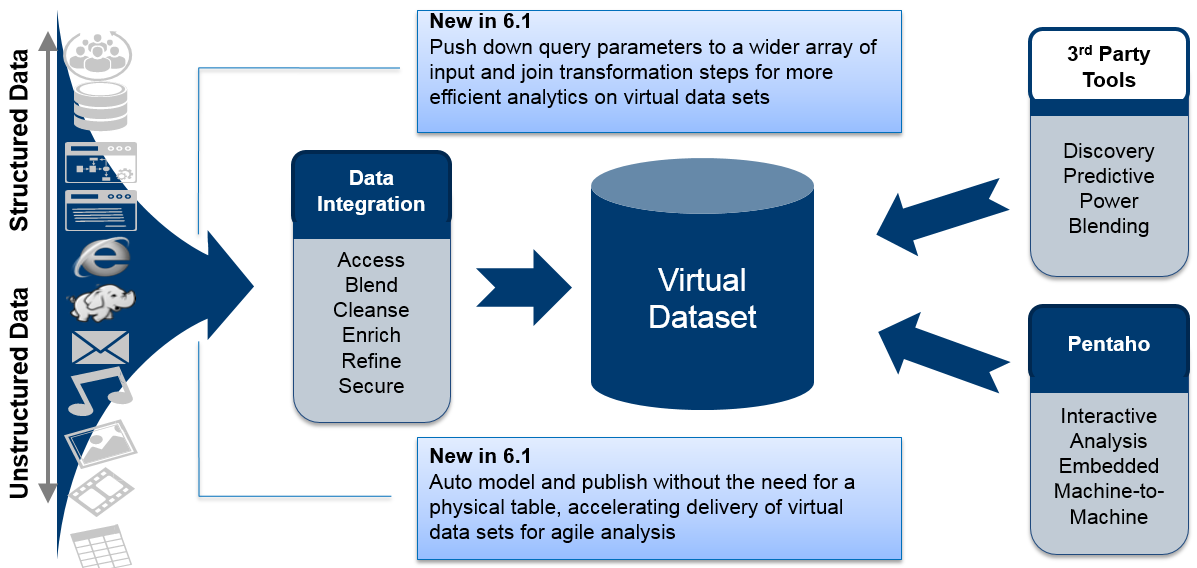

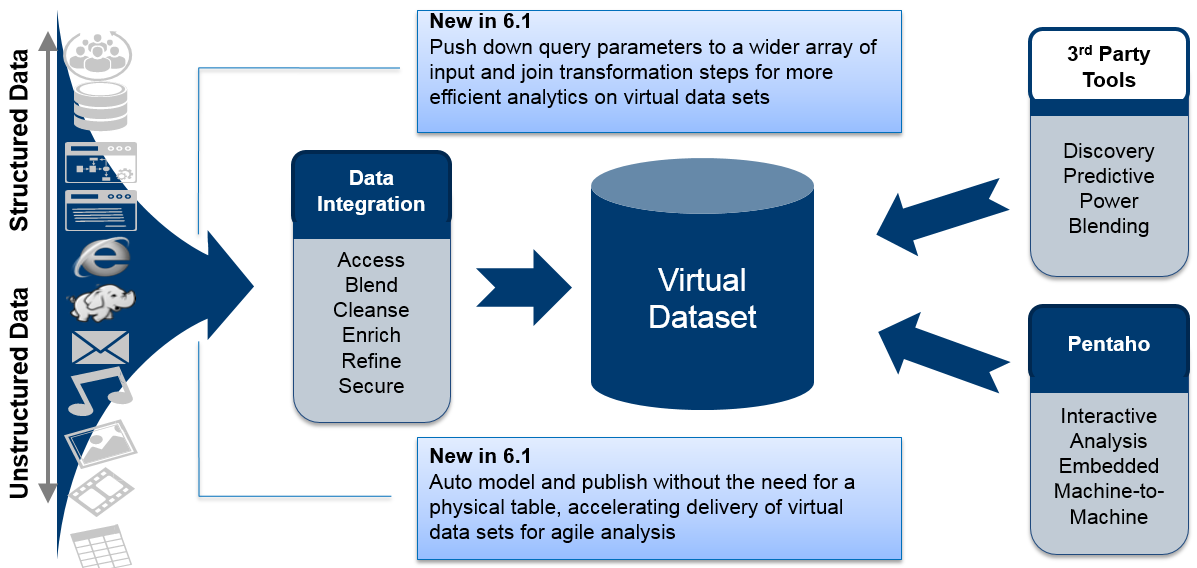

Each user access, each read of content, each creation or modification of the content defines a new audit action that the A.A.A.R. It’s easy to understand that the audit data are constantly created while the Alfresco E.C.M. In particular, Pentaho Data Integration is used to: extract Alfresco audit data and repository composition into the Data Mart and create the defined reports uploading them back to Alfresco.

Pentaho Data Integration ( ) is used in A.A.A.R.
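A nightly run could be driven in many ways (a cron entry is the usual choice). As a rough sketch only, the Python helpers below build a command line for Kitchen, the PDI command-line job runner, and compute the next night-time slot. The installation path, the repository connection name, the credentials and the job name used in the usage note are hypothetical placeholders, not values taken from A.A.A.R.

```python
from datetime import datetime, timedelta


def build_kitchen_command(kitchen, repository, user, password, job,
                          directory="/"):
    """Build the argument list to run a repository job with Kitchen.

    All values are supplied by the caller; nothing here is specific
    to the A.A.A.R. repository.
    """
    return [
        kitchen,
        f"-rep={repository}",
        f"-user={user}",
        f"-pass={password}",
        f"-dir={directory}",
        f"-job={job}",
        "-level=Basic",
    ]


def next_nightly_run(now, hour=2):
    """Return the next occurrence of hour:00 strictly after `now`."""
    candidate = now.replace(hour=hour, minute=0, second=0, microsecond=0)
    if candidate <= now:
        candidate += timedelta(days=1)
    return candidate
```

For example, `build_kitchen_command("/opt/pdi/kitchen.sh", "my_repository", "admin", "secret", "my_extraction_job")` returns a list that can be passed to `subprocess.run(..., check=True)` once the time returned by `next_nightly_run(datetime.now())` has been reached.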
